DEFORMABLE HAPTIC RENDERING FOR VOLUMETRIC MEDICAL IMAGE DATA

Virtual-reality-based surgical simulation is one of the most notable and practical applications of kinesthetic haptic rendering. The prospect of patient-specific simulation using pre-operative medical images drives the need for haptic rendering algorithms that allow direct manipulation of volumetric data. This project addresses the rendering of deformable tissue within medical images. We have developed:

- A proxy-based haptic rendering algorithm that operates directly on volumetric data and allows for real-time deformation.

- A process to generate a solid mesh from volumetric medical image data to drive a deformable body simulation.

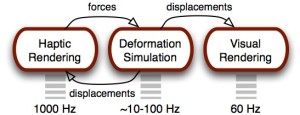

The three main architectural components within our application that enable visual and haptic rendering of deformable isosurfaces within volumetric data are shown below. Each subsystem performs a specific task and has its own update-rate requirement.

DATA REPRESENTATION

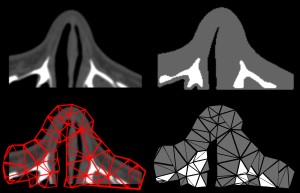

The medical image data (e.g., CT or MR) is stored in memory in its original form, and haptic rendering, including collision detection and tracking of the proxy’s motion, is computed directly on the volume. Although a coarser tetrahedral mesh drives the deformation, the fine geometric detail of the high-resolution image is preserved in both the visual and the haptic display.
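Computing haptic rendering "on the volume itself" means every query samples the raw voxel grid at an arbitrary, generally non-integer, position. The source does not name the interpolation scheme; the minimal sketch below assumes trilinear interpolation, and the `Volume` class, its x-fastest memory layout and its border-clamping policy are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Volume:
    """Hypothetical container for raw voxel intensities (x index varies fastest)."""
    nx: int
    ny: int
    nz: int
    data: List[float]

    def voxel(self, i: int, j: int, k: int) -> float:
        # Clamp indices so samples at the border reuse the edge voxels.
        i = min(max(i, 0), self.nx - 1)
        j = min(max(j, 0), self.ny - 1)
        k = min(max(k, 0), self.nz - 1)
        return self.data[(k * self.ny + j) * self.nx + i]

    def sample(self, p: Vec3) -> float:
        """Trilinearly interpolate the intensity at p, given in voxel coordinates."""
        x, y, z = p
        i0, j0, k0 = math.floor(x), math.floor(y), math.floor(z)
        fx, fy, fz = x - i0, y - j0, z - k0
        value = 0.0
        # Blend the 8 surrounding voxels with weights (1-f) or f along each axis.
        for dk in (0, 1):
            wz = fz if dk else 1.0 - fz
            for dj in (0, 1):
                wy = fy if dj else 1.0 - fy
                for di in (0, 1):
                    wx = fx if di else 1.0 - fx
                    value += wx * wy * wz * self.voxel(i0 + di, j0 + dj, k0 + dk)
        return value
```

For example, in a 2×2×2 volume whose intensity equals the voxel's x index (`data = [0.0, 1.0] * 4`), sampling at `(0.25, 0.5, 0.5)` returns 0.25. No mesh is involved, so the image resolution alone sets the geometric detail that can be felt.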

The solid mesh is generated by first segmenting the image into different tissue types via automatic thresholding. A Delaunay tetrahedralization algorithm is then applied to the segmented multi-material image domain to generate a mesh that encloses the original volume.
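The source does not say which automatic thresholding method is used. As one common example, the sketch below assumes Otsu's method, which picks the histogram bin that maximizes the between-class variance of the two resulting classes. It yields a single two-class split; separating several tissue types would need a multi-level variant or repeated thresholds. The function names are hypothetical, and the Delaunay tetrahedralization step is not sketched.

```python
from typing import List, Sequence


def otsu_threshold(hist: Sequence[int]) -> int:
    """Return the bin t that maximizes between-class variance.

    Class 0 holds bins [0, t] and class 1 holds bins (t, end].
    """
    total = sum(hist)
    if total == 0:
        raise ValueError("empty histogram")
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0, sum0 = 0, 0.0
    best_t, best_score = 0, -1.0
    for t, h in enumerate(hist):
        w0 += h
        sum0 += t * h
        w1 = total - w0
        if w0 == 0:
            continue  # class 0 still empty
        if w1 == 0:
            break  # class 1 empty from here on
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to the constant factor 1 / total**2.
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def segment(values: Sequence[int], t: int) -> List[int]:
    """Binary labels: 1 for values above the threshold bin (e.g. tissue), else 0."""
    return [1 if v > t else 0 for v in values]
```

For a bimodal histogram such as `[10, 10, 0, 0, 0, 10, 10]`, the selected bin is 1, so intensities 0 and 1 fall in one class and 5 and 6 in the other.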

HAPTIC RENDERING

The haptic rendering approach is not tied to any specific method for deformable body simulation. The only requirement is that the simulation can accommodate external forces and compute nodal displacements of the mesh over time. We use a co-rotational linear finite element model provided by the Vega FEM library in our application.
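The only coupling the paragraph requires (accept external nodal forces, return nodal displacements over time) can be written as a small interface. This is a hypothetical sketch: `DeformableBody`, its method names and `ToyTetherBody` are illustrative and are not the Vega FEM API. The stand-in model is deliberately not a co-rotational FEM; it tethers each node to its rest position with a damped spring so that the interface can be exercised.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


class DeformableBody(ABC):
    """Hypothetical interface: the only capabilities the haptic renderer relies on."""

    @abstractmethod
    def set_external_forces(self, forces: Sequence[Vec3]) -> None:
        """Set one external force per mesh node for the next step."""

    @abstractmethod
    def step(self, dt: float) -> List[Vec3]:
        """Advance the simulation by dt and return per-node displacements from rest."""


class ToyTetherBody(DeformableBody):
    """Stand-in model (not FEM): each node is tied to its rest position by a
    damped spring and integrated with semi-implicit Euler."""

    def __init__(self, n_nodes: int, k: float = 100.0, c: float = 5.0, mass: float = 1.0):
        self.k, self.c, self.m = k, c, mass
        self.u = [[0.0, 0.0, 0.0] for _ in range(n_nodes)]  # displacements
        self.v = [[0.0, 0.0, 0.0] for _ in range(n_nodes)]  # velocities
        self.f_ext: List[Vec3] = [(0.0, 0.0, 0.0)] * n_nodes

    def set_external_forces(self, forces: Sequence[Vec3]) -> None:
        self.f_ext = list(forces)

    def step(self, dt: float) -> List[Vec3]:
        for n in range(len(self.u)):
            for a in range(3):
                # Net force = external - spring - damping; update velocity first.
                f = self.f_ext[n][a] - self.k * self.u[n][a] - self.c * self.v[n][a]
                self.v[n][a] += dt * f / self.m
                self.u[n][a] += dt * self.v[n][a]
        return [tuple(d) for d in self.u]
```

Because the haptic side only calls `set_external_forces` and `step`, swapping the toy model for a real FEM solver would not change the rendering code, which is the decoupling the paragraph describes.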

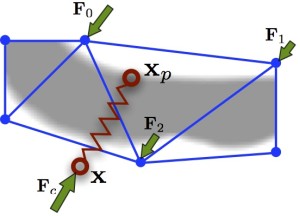

Interpolated volume sampling and central-difference gradient estimation are used to define an implicit surface function. The surface normal (gradient) defines a tangential constraint plane for the proxy. As the proxy moves along the constraint plane, interval bisection on the surface function updates the proxy position and surface constraint.
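A minimal sketch of one proxy update under stated assumptions: the surface function is taken as f(p) = I(p) − iso with tissue brighter than the isovalue (so f > 0 inside), the sampler is any callable (for instance a trilinear volume sampler), and the gradient step `h`, search distance `probe` and iteration count are hypothetical parameters. It projects the device motion onto the tangent constraint plane, then bisects along the normal to put the proxy back on the isosurface. It omits the crossing test a full proxy method needs to prevent pop-through.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
Field = Callable[[Vec3], float]


def add(a: Vec3, b: Vec3, s: float = 1.0) -> Vec3:
    """Return a + s * b."""
    return tuple(x + s * y for x, y in zip(a, b))


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def surface_fn(sample: Field, iso: float) -> Field:
    """Implicit surface f(p) = I(p) - iso; f > 0 inside tissue (assumes tissue is brighter)."""
    return lambda p: sample(p) - iso


def central_gradient(f: Field, p: Vec3, h: float = 0.5) -> Vec3:
    """Central-difference estimate of grad f at p with step h (voxel units)."""
    g = []
    for axis in range(3):
        e = [0.0, 0.0, 0.0]
        e[axis] = h
        g.append((f(add(p, tuple(e))) - f(add(p, tuple(e), -1.0))) / (2.0 * h))
    return tuple(g)


def outward_normal(f: Field, p: Vec3) -> Vec3:
    g = central_gradient(f, p)
    length = math.sqrt(dot(g, g))  # assumes a non-zero gradient at the proxy
    # f increases toward the inside, so the outward normal is -grad f / |grad f|.
    return tuple(-x / length for x in g)


def bisect_surface(f: Field, outside: Vec3, inside: Vec3, iters: int = 20) -> Vec3:
    """Interval bisection on f between a free-space point and a penetrating point."""
    for _ in range(iters):
        mid = tuple(0.5 * (a + b) for a, b in zip(outside, inside))
        if f(mid) < 0.0:
            outside = mid
        else:
            inside = mid
    return outside  # keep the proxy on the free-space side of the isosurface


def update_proxy(f: Field, proxy: Vec3, goal: Vec3, probe: float = 1.0) -> Vec3:
    """One constrained update of an on-surface proxy toward the device position `goal`."""
    if f(goal) < 0.0:
        return goal  # simplification: no test for surface crossings along the path
    n = outward_normal(f, proxy)
    d = add(goal, proxy, -1.0)
    dn = dot(d, n)
    if dn < 0.0:
        d = add(d, n, -dn)  # drop the component pointing into the constraint plane
    cand = add(proxy, d)
    # Re-project onto the isosurface; assumes the surface lies within `probe` of cand.
    if f(cand) < 0.0:
        return bisect_surface(f, cand, add(cand, n, -probe))
    return bisect_surface(f, add(cand, n, probe), cand)
```

On a synthetic sphere field `I(p) = 10 - |p|` with `iso = 5`, a proxy at `(5, 0, 0)` pushed toward `(4, 1, 0)` slides tangentially and is bisected back to a point about radius 5 from the origin, just on the free-space side.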

Each node of the tetrahedral mesh is associated with a rest-state position within the volume. To sample the deformed volume, the proxy’s enclosing tetrahedron in the deformed mesh is located, and the proxy’s barycentric coordinates within that tetrahedron are applied to the rest-state nodes to map the proxy back to its position in the undeformed volume, where the original image is sampled. The virtual coupling force is rendered to the operator and also distributed to the mesh nodes by barycentric weighting.
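The mapping and force distribution can be sketched as follows. Assumptions: the enclosing tetrahedron is found by brute-force search (the source does not say how it is located; practical systems would use a spatial index), the virtual coupling is a linear spring with a hypothetical stiffness `k`, and the mesh receives the equal-and-opposite reaction of the force rendered to the operator. All function names are illustrative.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Tet = Tuple[int, int, int, int]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return tuple(x - y for x, y in zip(a, b))


def det3(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Scalar triple product a . (b x c), i.e. det of the matrix with columns a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


def barycentric(p: Vec3, x: Sequence[Vec3]) -> Tuple[float, float, float, float]:
    """Barycentric weights of p in tetrahedron x[0..3], via Cramer's rule."""
    e1, e2, e3, d = sub(x[1], x[0]), sub(x[2], x[0]), sub(x[3], x[0]), sub(p, x[0])
    vol = det3(e1, e2, e3)  # six times the signed volume; assumed non-degenerate
    w1 = det3(d, e2, e3) / vol
    w2 = det3(e1, d, e3) / vol
    w3 = det3(e1, e2, d) / vol
    return (1.0 - w1 - w2 - w3, w1, w2, w3)


def locate(p: Vec3, deformed: Sequence[Vec3], tets: Sequence[Tet],
           eps: float = 1e-9) -> Optional[Tuple[int, Tuple[float, ...]]]:
    """Brute-force search for the deformed tetrahedron containing p."""
    for t, idx in enumerate(tets):
        w = barycentric(p, [deformed[i] for i in idx])
        if min(w) >= -eps:  # all weights non-negative: p is inside
            return t, w
    return None


def to_rest(w: Sequence[float], rest: Sequence[Vec3], idx: Tet) -> Vec3:
    """Apply the same weights to the rest-state nodes: the proxy's undeformed position."""
    return tuple(sum(wi * rest[i][a] for wi, i in zip(w, idx)) for a in range(3))


def coupling_force(proxy: Vec3, device: Vec3, k: float) -> Vec3:
    """Spring between proxy and device; this is the force rendered to the operator."""
    return tuple(k * (pp - dd) for pp, dd in zip(proxy, device))


def distribute(force: Vec3, w: Sequence[float], idx: Tet, nodal: List[Vec3]) -> None:
    """Add `force` to the tetrahedron's nodes, scaled by the barycentric weights."""
    for wi, i in zip(w, idx):
        nodal[i] = tuple(f + wi * c for f, c in zip(nodal[i], force))
```

Since the four weights sum to one, the nodal contributions add up to the applied force, and the same weights that warp the proxy into rest space also decide how strongly each node feels the interaction.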

RESULTS

Four computed tomography image sets were collected and used as test data. Computation times required for haptic rendering and deformation simulation were measured over ten-second intervals of typical interaction, as shown below.

| Volume | Resolution | Elements | Haptic (ms) | Deform (ms) |

| Ear | 352×352×352 | 2372 | 0.029 | 17.0 |

| Nose | 192×192×192 | 2677 | 0.027 | 12.4 |

| Hand | 512×512×640 | 4679 | 0.050 | 101.0 |

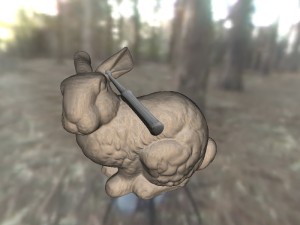

| Bunny | 512×512×534 | 1870 | 0.022 | 19.0 |

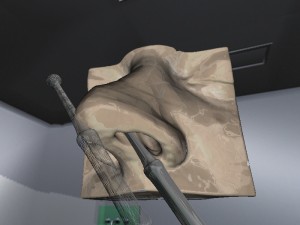
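As a rough reading of the results table, the per-update times can be converted into the highest rate each loop could sustain if it ran alone. The 1 kHz haptic target used for the budget fraction is a commonly cited figure and an assumption here, not a number stated in this work; the helper names are hypothetical.

```python
# Per-update computation times (ms), copied from the results table.
TIMINGS_MS = {
    "Ear": (0.029, 17.0),
    "Nose": (0.027, 12.4),
    "Hand": (0.050, 101.0),
    "Bunny": (0.022, 19.0),
}


def rate_hz(ms: float) -> float:
    """Highest update rate a loop could sustain if each update took `ms` milliseconds."""
    return 1000.0 / ms


def haptic_budget_fraction(ms: float, target_hz: float = 1000.0) -> float:
    """Fraction of one haptic frame consumed at `target_hz` (1 kHz is an assumption)."""
    return ms / (1000.0 / target_hz)


if __name__ == "__main__":
    for name, (haptic_ms, deform_ms) in TIMINGS_MS.items():
        print(f"{name}: haptic {rate_hz(haptic_ms):,.0f} Hz "
              f"({haptic_budget_fraction(haptic_ms):.1%} of a 1 kHz frame), "
              f"deformation {rate_hz(deform_ms):.1f} Hz")
```

Read this way, haptic rendering uses at most 5% of a 1 kHz frame for every data set, while deformation for the Hand volume updates at roughly 10 Hz. This gap is consistent with running haptics and deformation as separate subsystems at different rates.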

Deriving the deformable models directly from pre-operative image data produced realistic soft-tissue behavior. Interaction remained stable, with no pop-through, even at large penetration depths caused by large forces.

CONCLUSION

We have developed an approach for haptic rendering of deformable isosurfaces within volumetric medical image data. Our experiments have shown that the method is effective for a variety of anatomical structures. A physically based deformation model coupled to high-resolution volume data enables detailed visual and haptic display with realistic tissue behavior.