Evolution of Mechanical Fingerspelling Hands for People who are Deaf-Blind


David L. Jaffe, MS

Department of Veterans Affairs

Palo Alto Health Care System

Rehabilitation Research and Development Center

3801 Miranda Ave., Mail Stop 153

Palo Alto, CA 94304

650/493-5000 ext 6-4480

650/493-4919 fax

Keywords:

Deaf-blindness, fingerspelling, communication devices

Abstract:

People who are both deaf and blind can experience extreme

social and informational isolation due to their inability to converse easily

with others. To communicate, many of these individuals employ a tactile version

of fingerspelling and/or sign language, gesture systems representing letters or

words, respectively. These methods are far from ideal, however, as they permit

interaction only with others who are in physical proximity, knowledgeable in

sign language or fingerspelling, and willing to engage in one of these

"hands-on-hands" communication techniques. The problem is further

exacerbated by the fatigue of the fingers, hands, and arms during prolonged

conversations.

Mechanical hands that fingerspell may offer a solution to

this communication situation. These devices can translate messages typed at a

keyboard in person-to-person communication, receive TDD (Telecommunication

Devices for the Deaf) telephone calls, and gain access to local and remote

computers and the information they contain.

Introduction:

Communication is such a natural and integral part of

one's daily activities that it is taken for granted. Today instantaneous

international communication is an affordable reality. For example, it is not

uncommon for a person with access to the Information Superhighway (Internet) to

retrieve information and post messages from/to a dozen different countries

during a single on-line session. Cellular telephones and telephones in

airplanes are the latest advances in mobile communication technology. These

systems, which permit communication with anyone, at any time, and from any

location, suggest that being without communication is unnatural and personally

limiting. Yet there are many people for whom interpersonal isolation is a way

of life. These are the estimated fifteen thousand deaf-blind men, women, and

children in this country who cannot even communicate with another person on the

opposite side of the same room, let alone with someone on the other side of the

world.

Usher's Syndrome:

The majority of adults who live with the dual sensory

loss of deafness and blindness have a disease called Usher's syndrome. It

manifests itself as deafness at birth, followed by a gradual loss of vision

commencing in the late teens or early twenties. Although Usher's syndrome

accounts for 15 percent of congenital deafness, it is usually not diagnosed

until the onset of the visual impairment, or even later. Unaware that special

education preparatory to visual loss may be in order, these children are

usually enrolled in programs for the deaf where they learn fingerspelling and

sign language (and/or lip reading and speech) in addition to reading print.

Because they are identified as deaf, Braille skills are not taught.

When loss of vision is superimposed on deafness (as

happens with Usher's syndrome), a major channel of receptive communication is

lost, usually resulting in an enormous social and informational void.

Alternate methods of communication:

Braille is a potential tool for relieving some of this

isolation. In addition to providing a system for reading, a mechanical

representation of braille has been incorporated in electronic aids such as the

Telebraille (a TDD with a 20-character mechanical braille display), to enable

deaf-blind individuals to receive information in both face-to-face and remote

communication situations. Learning Braille as an adult, however, is difficult.

The very act of learning to read Braille may be considered a final admission of

blindness.

Many deaf people use sign language, which incorporates

more global movements and configurations of the hands and arms, as well as

facial expressions, to represent words and phrases. They supplement sign

language with "fingerspelling", a gesture system in which there is a

specific hand and finger orientation for each letter of the alphabet, to

communicate words for which there is no signed equivalent (such as proper

names).

Tactile fingerspelling and sign language:

A common communication technique used with and among

deaf-blind people is simply a hands-on version of fingerspelling and/or sign

language. Instead of receiving communication visually as deaf people do, the

deaf-blind person's hand (or hands) remain in contact with the hand (or hands)

of the person who is fingerspelling or signing. The full richness of the

motions present in sign language cannot be conveyed in the tactile mode

required by a deaf-blind individual. Instead, each word of a message is

typically spelled out, one letter at a time with the fingerspelling technique.

(Although many Usher's Syndrome patients can speak intelligibly or use sign

language, others use fingerspelling for expressive communication as

well.)

Figure 1 - The Manual Fingerspelling Alphabet

While such tactile reception works fairly well for many

deaf-blind people, it does have significant drawbacks. Since very few people

are skilled in these manual communication techniques, there are very few people

with whom to "talk". The need for interpreters poses still other

problems: locating, procuring, and paying for the interpreter service. A

problem unique to deaf-blind individuals is that many interpreters are

accustomed to being "read" visually by deaf clients and may not be

comfortable with the physical restrictions involved in signing while another

person's hands are touching theirs. In addition, the rapid fatigue resulting

from these tactile methods often requires two interpreters so that a break may

be taken from continuous fingerspelling or signing. The need for an

interpreter may also intrude on the deaf-blind individual's privacy and place

him/her in an extremely dependent situation due to the complete reliance on an

interpreter for any communication.

Method: The first robotic fingerspelling hand

In an attempt to alleviate these problems, the Southwest

Research Institute (SWRI) in San Antonio conceived and developed a mechanical

fingerspelling hand in 1977 [1]. This early device demonstrated the feasibility

of transmitting linguistic information to deaf-blind people by typing messages

on a keyboard connected through electrical logic circuitry to the mechanical

hand. The hand responded by forming the corresponding letters of the one-hand

manual alphabet. To receive the information, the deaf-blind user placed his/her

hand over the mechanical one to feel the finger positions, just as he/she would

with a human fingerspelling interpreter. This system finally enabled deaf-blind

people to receive communications from more than a few select individuals;

anyone who could use a keyboard could express themselves to the deaf-blind person

through the mechanical fingerspelling hand.

Result: The first robotic fingerspelling hand

While the SWRI system demonstrated the concept's

feasibility, it had many technical shortcomings: not all of the letters could

be properly formed, it operated slower than a human interpreter, and the

fluidity of motion which seemed to greatly enhance the intelligibility of

receptive tactile fingerspelling was lacking. In addition, any changes in

timing or how the hand formed the letters had to be achieved through mechanical

alterations of the hardware. This limited the device's flexibility as a

research tool.

Method: Dexter

In 1985, the Rehabilitation Engineering

Center of The Smith-Kettlewell Eye Research Foundation sponsored a class

project conducted by four graduate students in the Department of Mechanical

Engineering at Stanford University to design and fabricate an improved

state-of-the-art fingerspelling hand [2, 3, 4, 5, 6, 7]. Its major goal was to

develop a system with improved timing and easily modifiable finger positions.

These qualities were realized in a new robotic fingerspelling hand named

"Dexter."

Figure 2 - Dexter Fingerspelling Hand

Dexter's mechanical hardware:

Dexter looked like a mechanical version of a rather large

human hand projecting vertically out of a box. The four machined aluminum

fingers and a thumb were joined together at its palm. All digits operated

independently of each other and had a range of motion comparable to human

fingers. The thumb was jointed so that it could both sweep across the palm

and move in a plane perpendicular to it. A pneumatic rotary actuator

allowed the palm to pivot in a rotary fashion around a vertical steel rod much

the way a human hand can pivot from the wrist - except that a full 180 degrees

could be achieved by Dexter.

All Dexter's finger and thumb motions were actuated by

drive cables. Pneumatic cylinders pulled these cables which flexed the

individual fingers and thumb, while spring-driven return cables extended the

fingers. The cylinders, in turn, were activated by air pressure controlled by

electrically operated valves. These valves were controlled by a microcomputer

system. The actuating equipment and valves were housed in two separate

assemblies below the hand.

Dexter's computer hardware:

The original student design was based on an Intel 8085

STD-bus "target system" used in ME218 (Smart Product Design Course)

at Stanford. It consisted of the 8085 microcomputer, Forth programming

language, memory, and counter/timer support. The timer generated the signals

that determined the rate of hand motion and how long each finger position was

to be held. The additional circuitry needed to control Dexter was fabricated on

an STD card which plugged into the target system card cage. A single external

12 volt power supply activated the 22 valves under computer control. Digital

output port latches received data from the CPU, while Darlington power

transistors provided sufficient current to activate the electrically controlled

valves. Letters to be displayed on the hand were entered on an IBM-PC

computer's keyboard which was connected by a serial link to the target

hardware.

Dexter's hardware was subsequently revised at the

Rehabilitation Research and Development Center (RR&D) to consist of a Z80

microprocessor card, two medium-power driver cards, and a high-current DC power

supply all housed in an STD bus card cage. The CPU card itself contained

counter-timers, memory, and serial interfaces. Commercial medium-power DC

driver cards replaced the student-built wire-wrapped version and the power

supply for operating the pneumatic valves was included within the STD chassis.

An Epson HX-20 laptop computer's keyboard and display were employed to

communicate user messages over a serial link to the self-contained target

system.

Dexter's software:

The Forth programming language was chosen because: 1) its

design cycle is approximately eight times shorter than assembly language, 2) it

is an interactive and compact high-level language that can employ assembly

language for critical timing and interrupt service routines, 3) it uses a

standard host computer connected by a serial port to the target hardware for

development, and 4) the application program can be stored in non-volatile

memory after it is fully tested.

The student-designed Forth software was substantially

updated by RR&D to 1) execute from non-volatile memory, 2) accommodate

menu-driven alteration of critical parameters such as timing variables, 3)

allow new characters typed on the keyboard to be accepted while previous ones

were being fingerspelled, and 4) incorporate both modem and serial input of

characters.
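
The third item above, accepting new characters while earlier ones are still being fingerspelled, is commonly handled with a first-in, first-out buffer. The following Python sketch illustrates the idea only. The original software was written in Forth, and the class name `InputBuffer`, the function `spell_pending`, and the capacity of 64 characters are hypothetical, not taken from the Dexter code.

```python
from collections import deque


class InputBuffer:
    """FIFO buffer: characters may arrive at any time and are spelled in order."""

    def __init__(self, capacity=64):
        # Hypothetical capacity; the source does not give a buffer size.
        self.capacity = capacity
        self._queue = deque()

    def put(self, char):
        """Store an arriving character. Returns False if the buffer is full."""
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(char)
        return True

    def get(self):
        """Return the oldest waiting character, or None if nothing is waiting."""
        return self._queue.popleft() if self._queue else None


def spell_pending(buffer, spell_letter):
    """Spell every buffered character in arrival order; return how many were spelled."""
    count = 0
    while (char := buffer.get()) is not None:
        spell_letter(char)  # stand-in for the routine that moves the hand
        count += 1
    return count
```

In a real controller `put` would typically be called from a serial-input interrupt while the main loop calls `get`; here both run in ordinary code so the ordering behaviour is easy to see.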

Dexter's operation:

The microcomputer and its associated software controlled

the opening and closing of the bank of valves. The valves directed air pressure to

specific pneumatic cylinders, which pulled on the drive cables that served as the

"tendons" of the fingers. As a message was typed on a keyboard, each

letter's ASCII value was used by the software as a pointer into an array of

stored valve control values. The states (open or closed) of all 22 valves were

specified by three bytes. Two to six valve operations, each separated by a

programmed pause, were needed to specify the finger movements corresponding to

a single letter. The hand could produce approximately two letters per second,

each starting from and returning to a partially flexed neutral position.

An additional bit in the valve control byte triad was

used by the software to determine whether the current finger position was an

intermediate or final letter position. Different programmed pause times were

associated with each of these two situations.
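
The table lookup described above can be illustrated with a short Python sketch (the actual software was written in Forth). It assumes that the 22 valve states occupy the low 22 bits of a three-byte word and that the intermediate/final flag is bit 22. The source does not give the bit layout, and the valve numbers, table entries, and pause times below are invented for illustration.

```python
NUM_VALVES = 22
VALVE_MASK = (1 << NUM_VALVES) - 1
FINAL_FLAG = 1 << NUM_VALVES      # assumed position of the extra bit

INTERMEDIATE_PAUSE_S = 0.05       # hypothetical pause after an intermediate step
FINAL_PAUSE_S = 0.25              # hypothetical hold time for a finished letter


def pack_step(open_valves, final):
    """Encode one valve operation as three bytes (22 valve bits plus one flag bit)."""
    word = 0
    for valve in open_valves:
        if not 0 <= valve < NUM_VALVES:
            raise ValueError(f"valve index out of range: {valve}")
        word |= 1 << valve
    if final:
        word |= FINAL_FLAG
    return word.to_bytes(3, "big")


def unpack_step(triad):
    """Decode three bytes into (list of open valve indices, is_final_position)."""
    word = int.from_bytes(triad, "big")
    valves = [v for v in range(NUM_VALVES) if word & (1 << v)]
    return valves, bool(word & FINAL_FLAG)


# Table indexed by ASCII code. Each letter is a sequence of valve operations;
# these two entries are placeholders, not Dexter's real valve data.
LETTER_TABLE = {
    ord("A"): [pack_step([0, 3, 6], final=False),
               pack_step([0, 3, 6, 9, 12], final=True)],
    ord("B"): [pack_step([15], final=False),
               pack_step([15, 18], final=True)],
}


def steps_for(char):
    """Yield (open_valves, pause_seconds) for each valve operation of a letter."""
    for triad in LETTER_TABLE[ord(char.upper())]:
        valves, final = unpack_step(triad)
        yield valves, FINAL_PAUSE_S if final else INTERMEDIATE_PAUSE_S
```

For example, `list(steps_for("a"))` returns two steps: the first paired with the intermediate pause and the second with the longer final pause.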

Although the mechanical hand could not exactly mimic the

human hand in fingerspelling all the letters (such as the special wrist and arm

motions required in J and Z), the fact that Dexter always produced the same

motions for a given letter was an important factor influencing its

intelligibility. The inter-letter neutral position was another unnatural

feature of the design that did not accurately reflect human fingerspelling and

limited the speed of letter presentation. Despite these shortcomings, users of

Dexter had little difficulty in accommodating to it.

Result: Testing Dexter

Deaf-blind clients of Lions Blind Center (Oakland, CA)

served as subjects for the initial testing of Dexter. They were able to

identify most of the letters presented by the robotic hand without any

instructions, and in less than an hour were correctly interpreting sentences.

Equally important was their positive emotional reaction to the hand. They

seemed to enjoy using it and to be intrigued by its novelty.

There were no negative comments made concerning its mechanical nature or any

other aspect of the system.

Method: Dexter-II

Dexter-II was built by a second Stanford

student team in 1988 as a second-generation computer-operated

electro-mechanical fingerspelling hand [8, 9]. This device, like its

predecessor, translated incoming serial ASCII (a computer code representing the

letters and numbers) text into movements of a mechanical hand. Dexter-II's

finger movements were felt by the deaf-blind user and interpreted as the

fingerspelling equivalents of the letters that comprise a message.

Dexter-II was approximately one-tenth the volume of the

original Dexter mechanical system. It was designed by three Stanford graduate

mechanical engineering students and employed DC servo motors to pull the drive

cables of a redesigned hand, thereby eliminating the need for a supply of

compressed gas. A speed of approximately four letters per second, double that

of the original design, could be achieved with the improved design.

Figure 3 - Dexter-II Fingerspelling Hand

Mechanically, the hand (a right hand the size of a 10-year-old's)

was oriented vertically on top of an enclosure housing the motors.

Each finger could flex independently. In addition, the first finger could move

away from the other three fingers in the plane of the hand (abduction). The

thumb could move out of the plane of the palm (opposition). Finally, the wrist

could flex. Each hand motion was driven by its own servo motor connected to a

pulley. Wire cables anchored at the hand's fingertips and wound around pulleys

served as the fingers' "tendons". As the motor shafts were powered,

they turned the pulleys, pulling the cables, to flex the fingers. Torsion

springs at the "knuckles" separating the Delrin finger segments

provided the force to straighten the fingers when the motors released tension

on the cables.

Dexter-II's computer used the STD-bus enclosure, Z80

microprocessor card, and Epson HX-20 computer from Dexter. Two commercial

counter-timer cards replaced the medium-power driver cards and were used to

produce the pulse-width modulated waveforms required to control the DC servo

motors. In operation, a message was typed on a keyboard (the Epson HX-20) by an

able-bodied person. Each letter's ASCII value was used by Dexter-II's computer

software to access a memory array of stored control values. This data stream

programmed the pulse-width modulation chips to operate the eight servos and

flex the fingers. The resulting coordinated finger movements and hand positions

were felt by the deaf-blind communicator and interpreted as letters of a

message.
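
As a rough illustration of how a stored control value might become a servo pulse, the Python sketch below maps a normalized joint position to a pulse width and then to counter-timer counts. The 1.0 to 2.0 ms pulse range is the common hobby-servo convention and is assumed here, as is the 1 MHz counter clock. The source does not state Dexter-II's actual timing values, and all names are hypothetical.

```python
NUM_SERVOS = 8            # the text mentions eight servos
MIN_PULSE_US = 1000       # assumed pulse width at position 0.0 (microseconds)
MAX_PULSE_US = 2000       # assumed pulse width at position 1.0 (microseconds)
TIMER_HZ = 1_000_000      # hypothetical counter-timer clock frequency


def pulse_width_us(position):
    """Map a position in [0.0, 1.0] to a pulse width, clamping out-of-range input."""
    position = min(1.0, max(0.0, position))
    return MIN_PULSE_US + position * (MAX_PULSE_US - MIN_PULSE_US)


def timer_counts(position):
    """Convert a position to the number of timer ticks the pulse should last."""
    return round(pulse_width_us(position) * TIMER_HZ / 1_000_000)


def pose_to_counts(pose):
    """Convert one hand pose (eight positions) to eight timer count values."""
    if len(pose) != NUM_SERVOS:
        raise ValueError(f"expected {NUM_SERVOS} positions, got {len(pose)}")
    return [timer_counts(p) for p in pose]
```

Under these assumptions a half-flexed joint (`0.5`) corresponds to a 1500-microsecond pulse, and a letter's stored pose becomes a list of eight count values, one per servo.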

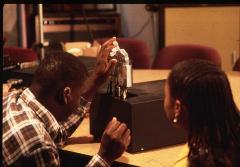

Result: Testing Dexter-II

Dexter-II was first tested by a deaf-blind woman who is

extremely proficient at "reading" tactile fingerspelling. She

provided many suggestions for improving Dexter-II's letter-shape

configurations. Later, it was introduced to twelve deaf-blind people during an

annual retreat in Sacramento.

In June of 1989, about twenty deaf-blind attendees at the

annual Deaf-Blind Conference in Colorado Springs had a chance to experience

Dexter-II. The device was also exhibited at the 1989 RESNA Conference in New

Orleans and at the InvenTech meeting in Anaheim, CA in September, 1989. In all

cases, the deaf-blind individuals' ability to initially understand Dexter-II

varied considerably. Some were able to understand Dexter-II immediately, while

others had trouble translating a few letters.

Although neither Dexter nor Dexter-II could exactly mimic

human hand movements in fingerspelling all the letters, they were able to

display close approximations that have proven to be easy to learn by deaf-blind

users. An advantage of Dexter-II's mechanical system was that it always

produced the same motions for a given letter - an important factor in

recognizing its fingerspelling "accent".

Method: Fingerspelling hand for Gallaudet

In 1992, Gallaudet University

(Washington, DC), with funding from NIDRR, contracted for the design and

construction of two third-generation fingerspelling hands for clinical

evaluation [10, 11]. These units were to be smaller, lighter, and more

intelligible than previous designs. The effort involved two facilities:

RR&D Center designed the new mechanical and computer systems, while the

Applied Science and Engineering Laboratories (ASEL) (Wilmington, DE) provided

critical hand position data and addressed telephone interface access

issues.

Figure 4 - Fingerspelling Hand for Gallaudet

Fingerspelling hand for Gallaudet mechanical design:

In this design, as in Dexter-II, each hand motion was

driven by a servo motor connected to a pulley. A cable was wound around the

pulley, routed up the finger, and attached to its tip. The fingers themselves

were constructed of Delrin segments attached to each other by a strip of carbon

fiber. The carbon fiber provided the flexible hinge and restoring force

necessary to extend the finger. When the motor shaft and pulley rotated, the

cable was pulled and the finger flexed. When the motor shaft rotated in the

other direction, the tension on the cable was released and the finger

straightened.

Fingerspelling hand for Gallaudet computer hardware design:

A Z180 SmartBlock (Z-World, Davis, CA) 8-bit

microcontroller accepted RS232 serial data and choreographed hand motion by

controlling eight DC servo motors. The controller itself was compact enough to

be packaged within the hand's enclosure.

Fingerspelling hand for Gallaudet computer software design:

The Forth programming language provided a simple user

interface, allowed modifications to the finger positions, and permitted the

system parameters to be altered. Fingerspelling movements in this version were

much more fluid due to the elimination of the inter-letter neutral position.

The software included two finger position tables for each letter pair. One

table was permanently installed, while the other could be altered using a built-in

editor. This facility permitted movements to be changed to enhance a particular

letter pair's readability. Fingerspelling speed was adjusted by a six-position

rotary switch. The first five positions selected increasing speeds by altering

the length of the pauses between letters, while the final position offered a

programmable speed.
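
The two mechanisms described above, letter-pair tables and a speed switch, can be sketched in Python as follows (the original software was written in Forth). The sketch assumes that an edited entry takes priority over the permanent one, which the source does not state explicitly. The pose values, pause lengths, and all names are invented for illustration.

```python
NUM_SERVOS = 8  # the Gallaudet hand drove eight servo motors

# Built-in table: (previous letter, next letter) -> sequence of servo poses.
# The pose values are placeholders, not measured hand-position data.
PERMANENT_TABLE = {
    ("A", "B"): [[0.8] * NUM_SERVOS, [0.1] * NUM_SERVOS],
    ("B", "A"): [[0.1] * NUM_SERVOS, [0.8] * NUM_SERVOS],
}

# User-editable table; assumed here to override the built-in entries.
editable_table = {}


def transition(previous, following):
    """Return the pose sequence for moving from one letter to the next."""
    key = (previous.upper(), following.upper())
    if key in editable_table:
        return editable_table[key]
    return PERMANENT_TABLE[key]


# Hypothetical pause lengths (seconds) for switch positions 1 to 5.
# Shorter pauses between letters mean faster fingerspelling.
PRESET_PAUSES_S = (0.50, 0.40, 0.30, 0.20, 0.10)


def pause_for_switch(position, programmed_pause_s):
    """Return the inter-letter pause for a six-position speed switch."""
    if not 1 <= position <= 6:
        raise ValueError("switch position must be 1 through 6")
    if position == 6:
        return programmed_pause_s  # sixth position: user-programmable speed
    return PRESET_PAUSES_S[position - 1]
```

Keeping the permanent table untouched means an unsuccessful edit can be discarded simply by deleting the entry from `editable_table`, which is one plausible reason for a two-table design.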

Result: Fingerspelling hand for Gallaudet testing and evaluation

ASEL tested and configured the fingerspelling hands for

use with TDDs. The letters were further optimized for improved recognition.

Nine deaf-blind people tested the hands over a two-month period at Gallaudet.

One individual was able to interpret all ten simple sentences without error.

Other users were able to understand 70% of the sentences. Comparable

performance was achieved with single isolated letters. The users identified

confusing letter combinations and suggested improvements for a commercial

prototype [12].

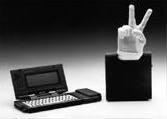

Method: Ralph

A fourth-generation fingerspelling hand

called Ralph (for Robotic ALPHabet) has been constructed by RR&D to serve

as a basis for technology transfer and commercialization. Specifically, this

design implemented an improved mechanical system.

Ralph's mechanical design:

Ralph's design is similar to the hands designed for

Gallaudet. In this device, however, a new mechanical system replaces the

pulleys and carbon fiber strips. Each hand motion is driven by a servo motor

connected by a lever arm to a rod. The rods push and pull a system of linkages

that flexes the individual fingers and the wrist. The elimination of the

pulleys makes Ralph more compact and able to fingerspell faster. Its fingers

are actively extended, unlike Dexter-II or the Gallaudet hands.

Figure 5 - Ralph Fingerspelling Hand

Ralph's computer design:

Ralph utilizes the same computer hardware as its

predecessor and its Forth software is organized in 17 modules. These modules

provide enhancements for the Forth kernel, an assembler (including routines

that implement special I/O instructions used by the microcontroller), an ANSI

display driver, serial port utilities, timer port utilities, clock utilities,

servo motor pulse width modulation generation, speed switch driver, interrupt

routines, input buffer software, timing control, hand data storage,

fingerspelling algorithm, hand position editing support, and the user

interface. As with the Gallaudet hand, Ralph's software provides a menu-driven

user interface, allows the finger positions to be edited, and permits

alteration of system parameters.

Ralph's operation:

Any device that produces RS232 serial data, including

terminals, modems, computers, OCR scanners, speech recognizers, or modified

closed caption systems, could be used to control Ralph. The user interface is

implemented as a menu system which provides easy access to the unit's various

functions including displaying and setting the microcontroller's parameters,

testing the hand motions, editing hand position data, and entering letters to

be fingerspelled.

In the fingerspelling mode, keypresses are entered on the

keyboard. The hand's software translates these keypresses into commands for the

DC servo motors. As the motor shafts rotate, they push/pull on the rods that

connect to the fingers' mechanical linkages. It is by this coordinated series

of motor commands that keyboard input is transformed into choreographed motion

representing fingerspelling.

The mechanical system provides sufficient torque to move

the fingers against the resistance of a user's hand, but not enough to cause

any pain or injury if the user's fingers happen to get caught under Ralph's. The

servo motors simply stall when their torque limit is exceeded.

Result: Ralph's testing and evaluation

Over a two-day period at ASEL, Ralph was evaluated for

approximately 8 hours by two individuals. One was familiar with the Gallaudet

fingerspelling hand and was completely deaf and blind. The other had not used

mechanical fingerspelling hands before and had some residual sight and hearing

capabilities.

They both reported trouble with some letters, but the

first user was able to correctly identify isolated characters over 75% of the

time.

When short sentences were presented, the experienced user

completely understood approximately 75% of the sentences. Furthermore, in each

of the failures, after a second presentation, the sentence was either

understood in its entirety or just one key word was misidentified.

Additionally, both users appreciated the new rounded

design of Ralph's finger segments because they felt more natural than the hands

built for Gallaudet.

Discussion: Technology transfer

Beyond evaluation, technology transfer issues must be

addressed. The market for fingerspelling hands needs to be assessed. A

collaborative effort with a manufacturer will be required to move this device

out of the laboratory and into the hands of deaf-blind people. A potential

manufacturer has been found in southern California. Current plans involve

pursuing Small Business Innovative Research (SBIR) funding for technology

transfer and the additional design changes to address remaining concerns

identified during Gallaudet's evaluation.

A potential solution exists for the provision of

fingerspelling hands to deaf-blind people. Within California (and some other

states), all telephone subscribers support a fund which provides telephone

access equipment for persons with disabilities. Under this program, approved

commercial versions of this fingerspelling hand could be furnished at no charge

to deaf-blind people.

Research has also been done on a "Talking

Glove" [13]. This device consisted of an instrumented glove worn by a

deaf-blind person that sensed the flex of each finger. Its pattern matching

software translated the wearer's fingerspelling gestures into letters which

could be displayed or vocalized by a speech synthesizer. This system could

provide an expressive communication channel for Ralph's users.

Conclusion:

Ralph was intended to serve deaf-blind users as a

complete receptive communication system, not just a means of receiving

information in face-to-face situations. Its ability to respond to computer

input means it can be interfaced to a TDD to provide deaf-blind people with

telephone communication. It can also be connected to computers to provide

improved vocational and avocational potential to the deaf-blind

community.

All encounters with Ralph and previous fingerspelling

hands have been enthusiastic, positive, and at times, highly emotional. The

increased communication capability and ability to "talk" directly

with people other than interpreters are powerful motivations for using

fingerspelling hands. They have the potential to provide deaf-blind users with

untiring personal communication at rates approaching those of a human

interpreter.

A commercially available product may help alleviate some

of the extreme isolation experienced by people who are deaf and blind. It is a

device which performs a worthy task - that of enabling human beings to

communicate with each other.

References:

1. Laenger, Charles J., Sr.; and Peel, H. Herbert, `Further Development and Tests of an Artificial Hand for Communication With Deaf-Blind People', Southwest Research Institute, March 31, 1978.

2. Danssaert, John; Greenstein, Alan; Lee, Patricia; and Meade, Alex, `A Finger-Spelling Hand for the Deaf-Blind, ME210 Final Report', Stanford University, June 10, 1985.

3. Gilden, Deborah, `A Robotic Hand as a Communication Aid for the Deaf-Blind', Proceedings of the Twentieth Hawaii International Conference on System Sciences, 1987.

4. Gilden, Deborah and Jaffe, David L., `Dexter - A Mechanical Finger-Spelling Hand for the Deaf-Blind', Rehabilitation Research and Development Center Progress Reports, 1986.

5. Gilden, Deborah and Jaffe, David L., `Dexter - A Helping Hand for Communicating with the Deaf-Blind', Ninth Annual RESNA Conference, Minneapolis, MN, 1986.

6. Jaffe, David L., `Speaking in Hands', SOMA: Engineering for the Human Body, Volume 2, Number 3, October, 1987, pp 6-13.

7. Gilden, Deborah and Jaffe, David L., `Dexter - a Robotic Hand Communication Aid for the Deaf-Blind', International Journal of Rehabilitation Research, 1988, II (2), pp 198-199.

8. Jaffe, David L., `Dexter II - The Next Generation Mechanical Fingerspelling Hand for Deaf-Blind Persons', Proceedings of the 12th Annual RESNA Conference, New Orleans, June, 1989.

9. Jaffe, David L., `Dexter - A Fingerspelling Hand', OnCenter - Technology Transfer News, Volume 1, Number 1, June, 1989.

10. Jaffe, David L., `Third Generation Fingerspelling Hand', Proceedings of the Technology and Persons with Disabilities Conference, Los Angeles, CA, March, 1993.

11. Jaffe, David L., `The Development of a Third Generation Fingerspelling Hand', 16th Annual RESNA Conference, Las Vegas, NV, June, 1993.

12. Harkins, Judith E., Korres, Ellie, and Jensema, Carl J., `Final Report - A Robotic Fingerspelling Hand for Communication and Access to Text for Deaf-Blind People', Gallaudet University, 1993.

13. Kramer, James and Leifer, Larry, `An Expressive and Receptive Communication Aid for the Deaf, Deaf-Blind, and Nonvocal', Proceedings of the Annual Conference, IEEE Engineering in Medicine and Biology Society, Boston, 1987.

Other references:

Ouellette, S., `Deaf-blind Population Estimates', In D.

Watson, S. Barrett, and R. Brown (Eds.), A Model Service Delivery System for

Deaf-Blind Persons. Little Rock, AR: University of Arkansas, Rehabilitation

Research and Training Center on Deafness/Hearing Impairment (not

dated).

Reed, C.M., Delhorne, L.A., Durlach, N.I., and Fischer,

S.D., `A Study of the Tactual and Visual Reception of Fingerspelling', Journal

of Speech and Hearing Research, 33, 786-797.

Funding:

Dexter's design and development were conducted under

DOE-NIHR Grant No. G008005054, with assistance from The Smith-Kettlewell Eye

Research Foundation.

Dexter II was developed with VA RR&D Core

funding.

Gallaudet Hand support was provided by NIDRR (Project

H133G80189-90) under contract to PAIRE (Palo Alto Institute for Research and

Education) and supervised by Gallaudet University.

Ralph was supported by VA RR&D Core funds through its

Technology Transfer Section.

Acknowledgements:

The following people and facilities were involved in the

evolution of mechanical fingerspelling hands. This effort would not have been

possible without their ideas, energy, and commitment.

- Rehabilitation Research and Development Center
  - David L. Jaffe
  - Douglas F. Schwandt
  - James H. Anderson
- Stanford student group - Dexter
  - John Danssaert
  - Alan Greenstein
  - Patricia Lee
  - Alex Meade
- Stanford student group - Dexter II
  - David Fleming
  - Gregory Walker
  - Sheryl Horn
- Smith-Kettlewell Eye Research
  - Deborah Gilden
- Gallaudet University
  - Judith E. Harkins
  - Ellie Korres
  - Carl J. Jensema
- Applied Science and Engineering Laboratories
  - Richard Foulds
  - William Harwin
  - Timothy Gove
- Palo Alto Institute for Research and Education
  - David D. Thomas
- University of Capetown Medical Center
  - David A. Boonzaier
