Digital Image Processing


Final Project for Spring 2006-2007

Pictures at an Exhibition

The project should be done individually or in groups of up to 3 people and should require about 50 hours per person. Each group will develop and implement their own algorithm to identify a painting from an image captured by a cell-phone camera.

INTRODUCTION

The task of the project is to develop a technique that recognizes paintings on display in the Cantor Arts Center from snapshots taken with a camera phone. Such a scheme would be useful as part of an electronic museum guide: the user points a camera phone at a painting of interest and hears commentary based on the recognition result. Applications of this kind are usually referred to as "augmented reality" applications; when implemented on hand-held mobile devices, they are called "mobile augmented reality." We are interested in the image processing part of the problem.

Many different image processing techniques may succeed in recognizing one painting from a relatively small set. You may use any algorithm that you learned in class, as well as algorithms that we have not discussed. Successful solutions, however, will probably require a combination of several schemes.
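As an illustration of one possible ingredient (not a complete or recommended solution), a global color histogram compared by histogram intersection can rank candidate paintings by their color content. The sketch below assumes 8-bit RGB input as returned by imread(); the function name `color_hist_similarity` and the default of 8 levels per channel are hypothetical choices. It uses only base MATLAB functions.

```matlab
function s = color_hist_similarity(img1, img2, nbins)
% COLOR_HIST_SIMILARITY  Hypothetical helper (illustrative sketch only).
% Compares two uint8 RGB images by histogram intersection of their
% normalized joint RGB histograms. Returns a value in [0, 1]; 1 means the
% two color distributions are identical.
if nargin < 3
    nbins = 8;                      % assumed: 8 levels per channel -> 512 bins
end
h1 = joint_rgb_hist(img1, nbins);
h2 = joint_rgb_hist(img2, nbins);
s = sum(min(h1, h2));               % histogram intersection
end

function h = joint_rgb_hist(img, nbins)
% Quantize each channel from 0..255 to 0..nbins-1, then count how often
% each (R, G, B) bin combination occurs.
q = floor(double(img) * nbins / 256);
idx = q(:, :, 1) * nbins^2 + q(:, :, 2) * nbins + q(:, :, 3) + 1;  % 1..nbins^3
h = accumarray(idx(:), 1, [nbins^3, 1]);
h = h / sum(h);                     % normalize so the bins sum to 1
end
```

A nearest-neighbor classifier could then return the title of the training image with the highest similarity. On its own, however, a global color histogram ignores spatial layout and is sensitive to lighting changes, which is one reason a combination of schemes is likely to be needed.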

You will design and test your algorithm with a set of training images and the corresponding ground truth data, which can be downloaded from the Downloads section. After you submit your implementation, we will evaluate its performance on a set of test images that are not in the training set. Each test image shows one of the paintings in the training set, but was taken at a different time and from a different vantage point. You may assume that the variations among the training images are representative of those in the test images.

Details of the Painting Photographs:

The photographs in the training and test sets were all taken in the European Gallery of the Cantor Arts Center. At least four photos were taken of each of 33 paintings, using a Nokia N93. Three photos of each painting have been randomly selected from the database for you to use as training images. The museum environment presents several challenges because of the low-light conditions: the images are quite noisy, and many show motion blur and/or defocus. Nevertheless, the 3-megapixel images provide relatively high resolution for cell-phone photos.

DOWNLOADS

Training Images and Ground Truth Data

Training Images: Gallery or ZIP file

- The training images are regular JPEG images.

- The images can be read with imread() in MATLAB.

Ground Truth: ground_truth.m

- The ground truth data are stored in a MATLAB script file.

- Read the header comments of the m-file for more info.

INSTRUCTIONS

Your algorithm has to output the title of the painting in a given image.

Specifically, the interface between your painting identification function and the evaluation routine is specified in MATLAB notation as follows:

```matlab
function painting_title = identify_painting(img)
```

The input and output are defined as follows:

img: height-by-width-by-3 uint8 array representing a 24-bit RGB image obtained through imread().

painting_title: A character array indicating the title of the dominant painting contained in the input image. This must exactly match one of the strings given in ground_truth.m, as compared by strcmp().
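Because scoring relies on an exact strcmp() match, it may help to check your output format before submitting. The following is a minimal sketch of such a self-check; `is_valid_title` is a hypothetical helper (not part of the official evaluation routine), and `valid_titles` is assumed to be a cell array of character vectors that you collect yourself from ground_truth.m.

```matlab
function ok = is_valid_title(painting_title, valid_titles)
% IS_VALID_TITLE  Hypothetical self-check, not the staff's evaluation code.
% Returns true if PAINTING_TITLE is a character row vector that matches,
% via strcmp, one entry of VALID_TITLES (a cell array of character vectors
% assumed to hold the titles listed in ground_truth.m).
ok = ischar(painting_title) && isrow(painting_title) && ...
     any(strcmp(painting_title, valid_titles));
end
```

Since strcmp() is case-sensitive and does not trim whitespace, an output such as 'Vesuvius' or 'vesuvius ' would not match 'vesuvius' and would score zero even if the recognition itself were correct.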

You may use any MATLAB built-in function or any function from the MATLAB Image Processing Toolbox. You may NOT use other libraries or any code other than your own; however, you may re-implement any library you wish.


The performance metric

For a single input image:

$$\text{Score} = \begin{cases} 1 & \text{if the identification is correct,} \\ 0 & \text{otherwise.} \end{cases}$$

For multiple images:

Your program will be run on 10 images from our test set. Each test image contains one of the paintings in the training set. The total score is the sum of the individual image scores.

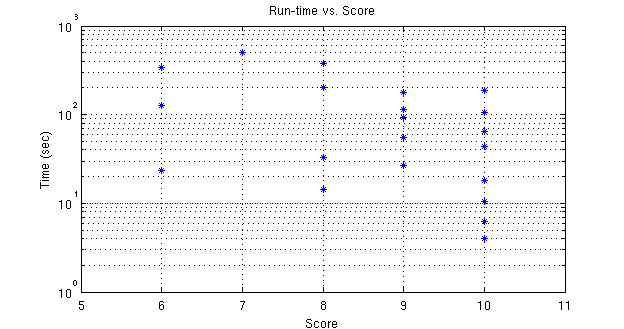
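The scoring rule above can be sketched in a few lines of MATLAB. This is a hypothetical helper for testing on your own held-out images, not the staff's evaluation code; it assumes the predicted and true titles are stored in cell arrays of the same size, one entry per image.

```matlab
function total = total_score(predicted, truth)
% TOTAL_SCORE  Sketch of the scoring rule (hypothetical helper).
% PREDICTED and TRUTH are same-size cell arrays of character vectors, one
% entry per test image. Each image scores 1 if strcmp finds an exact match
% and 0 otherwise; the total is the sum of the per-image scores.
per_image = strcmp(predicted, truth);   % element-wise logical 0/1 scores
total = sum(per_image(:));
end
```

For example, `total_score({'vesuvius', 'deposition'}, {'vesuvius', 'musical_party'})` gives a total of 1.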

Tie breaker:

Programs with the same score will be ranked according to run-time.
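The ranking rule can be expressed as a two-key sort: score first, then run time. The sketch below is hypothetical and assumes that a higher score ranks first and, among equal scores, a shorter run time ranks first.

```matlab
function order = rank_programs(scores, runtimes)
% RANK_PROGRAMS  Hypothetical sketch of the ranking rule: sort by total
% score (higher first), then break ties by run time (assumed: shorter first).
% SCORES and RUNTIMES are vectors with one entry per group; ORDER lists the
% group indices from best to worst as a column vector.
[~, order] = sortrows([-scores(:), runtimes(:)]);
end
```

Negating the scores lets a single ascending sortrows() call handle both keys.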


The maximum run time for a single image is 1 minute. We will abort your program if it takes longer.
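To check your own run time against this limit, you could wrap the call with tic/toc. The sketch below is a hypothetical helper; `identify_fn` would typically be `@identify_painting`. It cannot abort a slow call, so it only reports afterwards whether the limit was exceeded.

```matlab
function [painting_title, elapsed, too_slow] = timed_identify(identify_fn, img, limit_s)
% TIMED_IDENTIFY  Hypothetical helper for measuring run time per image.
% Calls IDENTIFY_FN on IMG and measures the wall-clock time with tic/toc.
% The limit is only checked after the call returns (no abort).
if nargin < 3
    limit_s = 60;                   % 1-minute limit per image
end
t0 = tic;
painting_title = identify_fn(img);
elapsed = toc(t0);
too_slow = elapsed > limit_s;
end
```

Timings measured on your own machine may differ from those on the SCIEN machines, so it may be wise to leave some margin below the limit.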

DEADLINES

To register your group, please send an e-mail to ee368-spr0607-staff@lists.stanford.edu with the subject "group registration for project".

Group registration: 11:59 PM, May 12

Program submission: 11:59 PM, June 1

Report submission: 11:59 PM, June 2

No extensions will be given.

PROJECT SUBMISSION

(1) Please send your submissions to ee368-spr0607-staff@lists.stanford.edu (do not cc the TAs' or the professor's personal e-mail addresses).

(2) For the program, please include ONLY the necessary files in ONE ZIP file named "ee368groupXX.zip" and e-mail it with the subject "ee368 groupXX program". Your two-digit group number XX is listed below.

(3) For the report, please prepare a PDF file named "ee368groupXX.pdf" and e-mail it with the subject "ee368 groupXX report". Your two-digit group number XX is listed below.

Please follow the IEEE two-column format for the project report.

Templates (both Word and LaTeX) are available from the websites of several IEEE conferences. You can take a look at some reports from last year to get a general idea about the format. Please describe your algorithm precisely and concisely and make sure it is easy to read and grasp.

(4) Note that your code will be run on the SCIEN machines by the teaching staff. Hence, it is a good idea to get an account there and make sure that there are no platform-specific problems with your code.

(5) If you work as a group, you need to submit a log of who did what together with your report.

Group 01: Prashant Goyal, Vikram Srivastava

(report)

Group 02: Vincent Gire, Sharareh Noorbaloochi

(report)

Group 03: Chris Tsai, June Zhang, Ivan Janatra

(report)

Group 04: Gabe Hoffman, Peter Kimball, Steve Russell

(report)

Group 05: Eric Chu, Erin Hsu, Sandy Yu

(report)

Group 06: Catie Chang, Mary Etezadi-Amoli, Michelle Hewlett

(report)

Group 07: David Lau, Qun Feng Tan

(report)

Group 08: Karen Zhu, Stephanie Kwan

(report)

Group 09: HyukJoon Kwon, Jongduk Baek

(report)

Group 10: Eva Enns, Shahar Yuval, Mudit Garg

(report)

Group 11: Rahul Choudhury

(report)

Group 12: Hyungrok Do

(report)

Group 13: Pradeep Kumar Dunna

(report)

Group 14: Sara Bolouki

(report)

Group 15: Roger Hsiao

(report)

Group 17: Mithun Ramdas Kamat

(report)

Group 19: Eric Calvin Lin

(report)

Group 20: Jacob Elliot Mattingley

(report)

Group 21: Atanas Yoshkov Petkov

(report)

Group 22: Travis Michael Skare

(report)

Group 23: Han-I Su

(report)

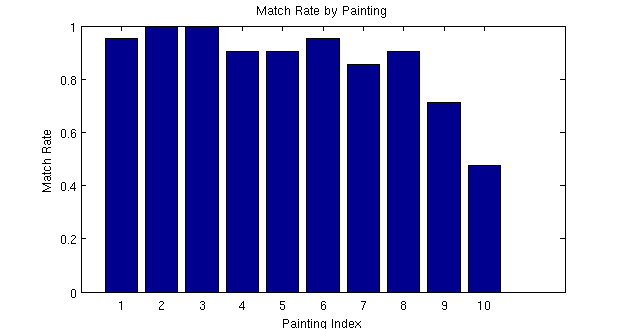

| Image | Painting title |
|---|---|
| 01.jpg | still_life_with_crab |
| 02.jpg | education_of_the_virgin |
| 03.jpg | landscape_with_ruins |
| 04.jpg | landscape_with_adam_and_eve |
| 05.jpg | vesuvius |
| 06.jpg | musical_party |
| 07.jpg | prison_of_saint-lazare |
| 08.jpg | deposition |
| 09.jpg | schloss_angenstien |
| 10.jpg | adam_and_eve |

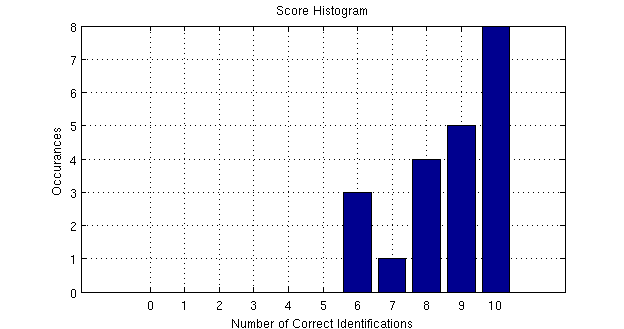

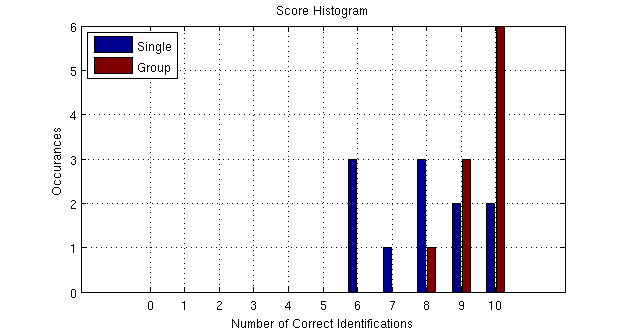

Score Distributions